
OpenAI Runs Back to Scaling Laws as Google Pushes It Into ‘Code Red’

OpenAI marked the third anniversary of ChatGPT at a moment when the AI industry has become fiercely competitive. Google’s release of Gemini 3 and Nano Banana Pro has prompted OpenAI to adjust its focus, with CEO Sam Altman on Monday directing employees to accelerate product development and to consider releasing a new reasoning model next week.

In a Slack memo, as reported by The Information, Altman declared a ‘Code Red’, an internal alert signalling that a competitor’s progress could threaten the company’s market position and that teams must reprioritise their resources.

Google is not OpenAI’s only concern. Chinese AI lab DeepSeek recently launched two new reasoning models, DeepSeek-V3.2 and DeepSeek-V3.2-Speciale, which rival Gemini 3.0 Pro and match GPT-5.1 in performance. Amazon, too, launched its new Nova models at AWS re:Invent 2025.

In the memo, Altman said that projects tied to advertising, AI health and shopping agents, and the personal assistant known as Pulse will be pushed back. He also encouraged teams to consider temporarily moving talented staff to priority areas, and said there will be a daily check-in for those working on ChatGPT improvements.

The shift is notable. OpenAI has usually juggled many large research projects at once; this change shows that the company is now prioritising practical, reliable, and profitable products.

What is Garlic?

OpenAI is reportedly working on a new model called Garlic. The model is meant to compete with Google’s Gemini 3 and Anthropic’s Opus 4.5, particularly in coding and complex reasoning.

OpenAI did not respond to AIM’s request for comment on the development.

The Information reported that initial internal results indicate strong performance, with a possible release as GPT-5.2 or GPT-5.5 in early 2026.

According to a report by SemiAnalysis, OpenAI hasn’t completed a full-scale pre-training run for any next-generation model since GPT-4o launched in May 2024. The report says GPT-5 is essentially a heavily fine-tuned version of the old 4o foundation rather than a true new generation.

A report by Epoch AI notes that GPT-5 used less training compute than GPT-4.5 because OpenAI shifted towards scaling post-training rather than running larger pre-training cycles.

Users on social platforms have criticised the company for not addressing its challenges, including the copyright issues surrounding its video generation tool Sora.

Despite these challenges, OpenAI continues to lead in user adoption. According to Similarweb, Gemini saw 1.182 billion visits in October 2025, while ChatGPT reached 6.165 billion. However, Gemini users spend more time per visit than ChatGPT users.

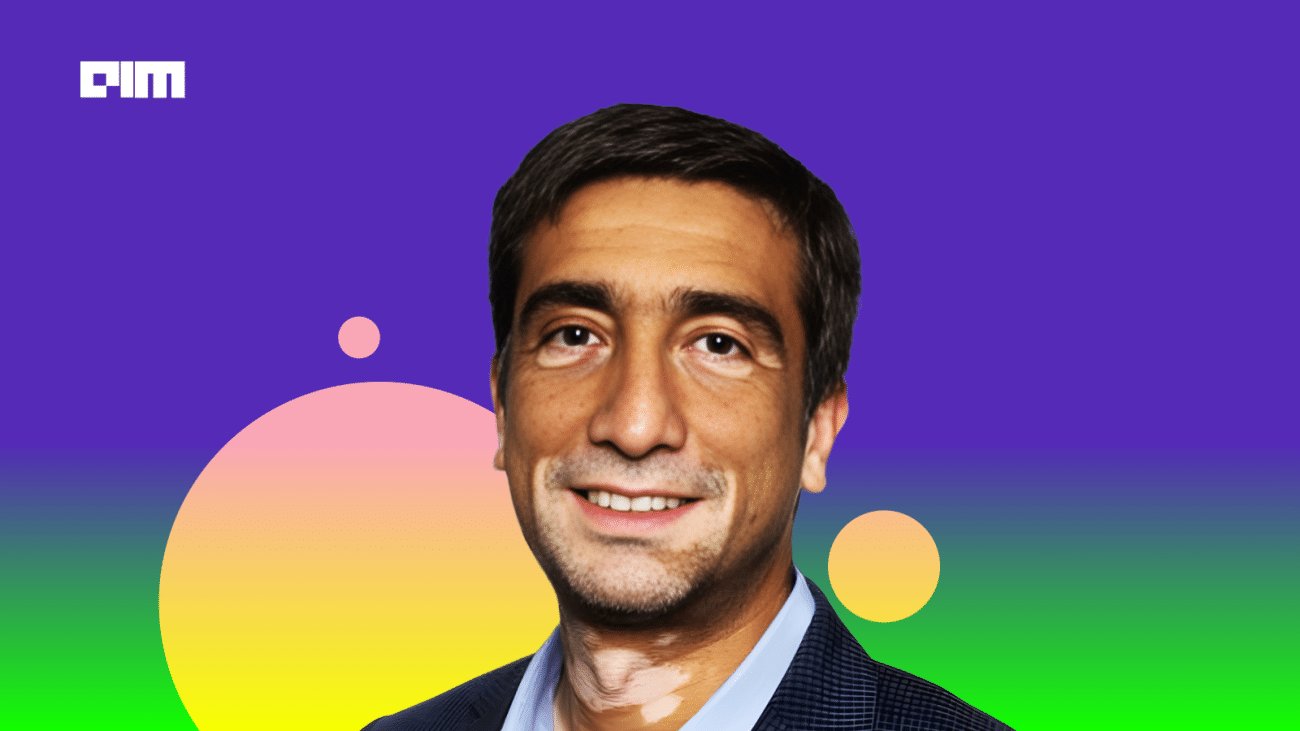

“Today, ChatGPT is the #1 AI assistant worldwide, with around 70% of assistant usage,” OpenAI’s head of ChatGPT, Nick Turley, wrote in a post on X.

Turley highlighted ChatGPT’s growing role in search, a market dominated by Google, calling it one of the company’s biggest opportunities. “ChatGPT now accounts for roughly 10% of search activity, and it’s growing quickly.”

Scaling is Not Dead

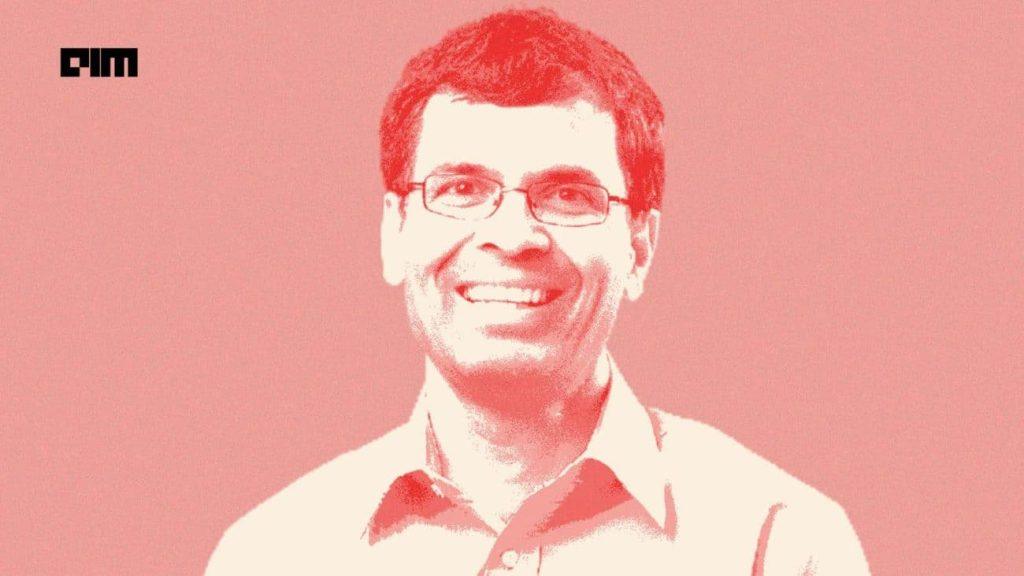

OpenAI researcher Mark Chen said on a recent podcast that the company remains confident about the performance of its upcoming model despite stiff competition from Google’s Gemini 3. He acknowledged Google’s progress but added that OpenAI’s internal models are already performing at a similar level.

Chen said the company has spent the past two years significantly improving reasoning capabilities, but in doing so it “lost a little bit of muscle” in other crucial areas such as pre-training and post-training. The company is now rebuilding that strength and, he said, seeing significant gains as a result.

Addressing growing speculation that the limits of large-scale training have been reached, he pushed back firmly. “A lot of people say scaling is dead. We don’t think so at all,” he said, adding that OpenAI still sees “a lot of room” in large-scale pre-training. The company, he said, has already begun training “much stronger models” as a result of this renewed focus.

OpenAI appears to have the compute to back this push, having recently struck several compute deals, including a multi-billion-dollar partnership with AWS.

Its annual revenue is set to hit $20 billion by year-end. According to an HSBC update cited by the Financial Times, ChatGPT is projected to reach 3 billion weekly users by 2030, with more than 220 million of them paying subscribers.

OpenAI may be growing fast, but HSBC warns it could still be unprofitable in 2030 and would need over $200 billion in compute to sustain its plans.

As Pedro Domingos, professor emeritus of computer science and engineering at the University of Washington, joked, “just as it was about to go bankrupt – OpenAI stumbled on AGI.”

With new models in the pipeline and fresh compute deals in place, OpenAI is hoping for a comeback, much like the one Google has staged.

The post OpenAI Runs Back to Scaling Laws as Google Pushes It Into ‘Code Red’ appeared first on Analytics India Magazine.